Understanding customer sentiment has become a vital asset for businesses. Sentiment analysis, the task of interpreting and classifying the emotions expressed in text, helps companies make data-driven decisions, improve customer experiences, and stay ahead of the competition. However, relying on external API providers for sentiment analysis can be costly and introduces dependencies on network connectivity and third-party services.

What if you could perform sentiment analysis in-house, running a powerful yet lightweight model locally? In this blog post, we'll explore how fine-tuning a pre-trained model can provide an efficient, cost-effective solution for sentiment analysis that runs on modest hardware once trained and does not rely on external APIs.

Benefits of Local Fine-Tuned Models

Cost Efficiency

Running a sentiment analysis model locally eliminates recurring fees associated with external APIs, offering a sustainable solution for businesses processing large volumes of data.

Customization

Fine-tuning allows the model to adapt to the specific language and nuances of your domain, improving accuracy over generic models provided by external services.

Independence and Data Privacy

Hosting the model in-house gives you complete control over its operation and data. This enhances security, ensures compliance with data protection regulations, and frees you from the availability and policy constraints of third-party providers.

Choosing the Right Model: DistilBERT

To implement an efficient local solution, we selected DistilBERT, a distilled version of Google's BERT (Bidirectional Encoder Representations from Transformers) model released by Hugging Face. DistilBERT retains over 95% of BERT's performance on the GLUE benchmark while having 40% fewer parameters and running 60% faster, making it well suited to deployment on machines without powerful GPUs. Its smaller size and faster inference keep resource usage low, and it can be fine-tuned for specific tasks like sentiment analysis.
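The size difference can be sanity-checked with simple arithmetic. The sketch below counts parameters from the published base architectures (hidden size 768, feed-forward size 3072, a vocabulary of 30,522 tokens, 512 positions, 12 encoder layers for BERT-base and 6 for DistilBERT). The helper names are ours and not part of any library, and the counts cover the encoder only, without a task-specific head.

```python
def encoder_layer_params(hidden: int, ffn: int) -> int:
    """Parameters in one Transformer encoder layer (weights and biases)."""
    attention = 4 * (hidden * hidden + hidden)  # query, key, value, output projections
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden)
    layer_norms = 2 * (2 * hidden)  # two LayerNorms, each with a scale and a shift
    return attention + feed_forward + layer_norms


def model_params(layers: int, hidden: int = 768, ffn: int = 3072,
                 vocab: int = 30522, max_positions: int = 512,
                 token_types: int = 0, pooler: bool = False) -> int:
    """Embedding parameters plus `layers` encoder layers, plus an optional pooler."""
    embeddings = (vocab + max_positions + token_types) * hidden + 2 * hidden
    total = embeddings + layers * encoder_layer_params(hidden, ffn)
    if pooler:
        total += hidden * hidden + hidden
    return total


# BERT-base has segment (token-type) embeddings and a pooler; DistilBERT drops both.
bert_base = model_params(layers=12, token_types=2, pooler=True)
distilbert = model_params(layers=6)
reduction = 1 - distilbert / bert_base

print(f"BERT-base:  {bert_base:,} parameters")
print(f"DistilBERT: {distilbert:,} parameters")
print(f"Reduction:  {reduction:.1%}")
```

Under these assumptions the script reports roughly 109 million parameters for BERT-base and 66 million for DistilBERT, a reduction of about 39%, which is in line with the 40% figure above.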

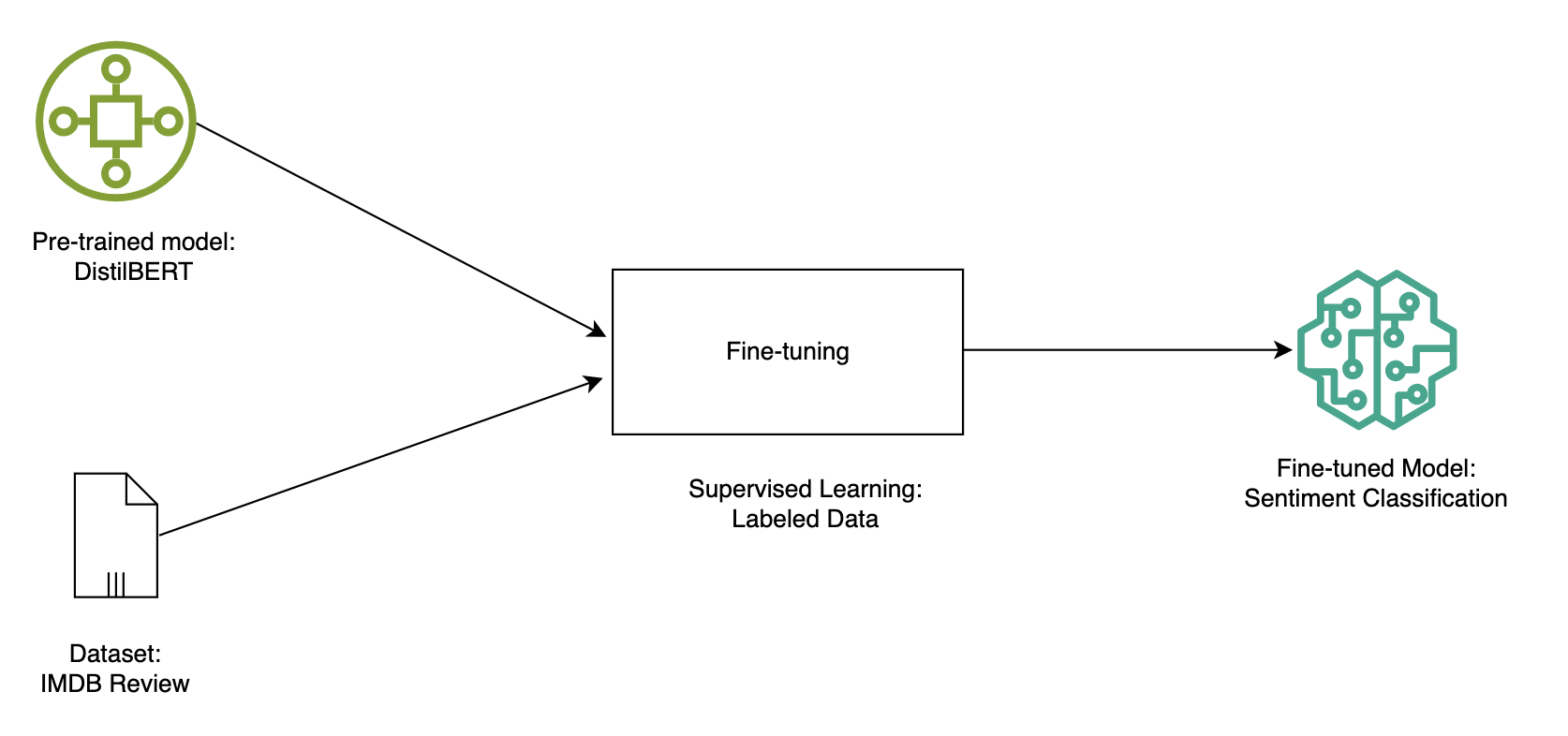

The Fine-Tuning Process

Fine-tuning a pre-trained model like DistilBERT tailors it to your specific task — in this case, sentiment analysis on customer reviews.

Data Preparation

We used the IMDb dataset, a collection of 50,000 movie reviews labeled as positive or negative and split evenly into 25,000 training and 25,000 test reviews. This dataset provides a solid foundation for training the model to recognize sentiment in text.
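As a rough sketch of this step, the code below loads the reviews and holds out part of the training data for validation. It assumes the data is fetched with the Hugging Face `datasets` library under the name `imdb`, which the post does not state. The function names, the 10% validation fraction, and the seed are illustrative choices of ours.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def train_validation_split(examples: Sequence[T],
                           validation_fraction: float = 0.1,
                           seed: int = 42) -> Tuple[List[T], List[T]]:
    """Shuffle the examples reproducibly and hold out a fraction for validation."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_validation = int(len(shuffled) * validation_fraction)
    return shuffled[n_validation:], shuffled[:n_validation]


def load_imdb() -> Tuple[List[Tuple[str, int]], List[Tuple[str, int]]]:
    """Return (train, test) lists of (text, label) pairs; 0 = negative, 1 = positive."""
    # Imported lazily so the split helper above works without the library installed.
    from datasets import load_dataset  # assumption: Hugging Face `datasets`

    dataset = load_dataset("imdb")
    train = [(row["text"], row["label"]) for row in dataset["train"]]
    test = [(row["text"], row["label"]) for row in dataset["test"]]
    return train, test
```

A typical call would be `train, test = load_imdb()` followed by `train, validation = train_validation_split(train)`, which keeps the official test split untouched for a final check.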

Overcoming Computational Challenges

Fine-tuning is far more demanding than inference and benefits from a GPU, which a standard local machine may not have. To address this, we used Google Colab, a cloud-based notebook platform offering access to powerful GPUs. The free tier has usage limits, so we opted for a paid plan to ensure sufficient resources and avoid session interruptions.

Training and Validation

Using Python and the PyTorch framework, we fine-tuned DistilBERT on the IMDb dataset. The process involved:

- Adjusting the Model: Adding a classification layer to enable sentiment prediction.

- Training: Running the model through the dataset to learn patterns associated with positive and negative sentiments.

- Validation: Testing the model on unseen data to assess its performance. Our model achieved over 90% accuracy.
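The three steps above can be sketched roughly as follows. This is a minimal illustration, not the code used for the post: it assumes the Hugging Face `transformers` library on top of PyTorch and the `distilbert-base-uncased` checkpoint, and the learning rate, batch size, and maximum sequence length are illustrative values. Batches are taken in order, so shuffle the data beforehand.

```python
from typing import List, Sequence


def accuracy(predictions: Sequence[int], labels: Sequence[int]) -> float:
    """Fraction of predictions that match the reference labels."""
    correct = sum(int(p == y) for p, y in zip(predictions, labels))
    return correct / len(labels)


def fine_tune(texts: Sequence[str], labels: Sequence[int], epochs: int = 1,
              lr: float = 2e-5, batch_size: int = 16):
    """Fine-tune DistilBERT with a two-class classification head."""
    # Heavy dependencies are imported lazily, only when training is requested.
    import torch
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    name = "distilbert-base-uncased"  # assumption: checkpoint not named in the post
    tokenizer = AutoTokenizer.from_pretrained(name)
    # num_labels=2 adds a freshly initialised positive/negative classification layer.
    model = AutoModelForSequenceClassification.from_pretrained(name, num_labels=2)
    optimizer = torch.optim.AdamW(model.parameters(), lr=lr)

    model.train()
    for _ in range(epochs):
        for start in range(0, len(texts), batch_size):
            batch = tokenizer(list(texts[start:start + batch_size]), padding=True,
                              truncation=True, max_length=256, return_tensors="pt")
            targets = torch.tensor(list(labels[start:start + batch_size]))
            loss = model(**batch, labels=targets).loss  # cross-entropy from the head
            loss.backward()
            optimizer.step()
            optimizer.zero_grad()
    return tokenizer, model


def predict(tokenizer, model, texts: Sequence[str], batch_size: int = 32) -> List[int]:
    """Return the predicted class index (0 or 1) for each text."""
    import torch

    model.eval()
    predictions: List[int] = []
    with torch.no_grad():
        for start in range(0, len(texts), batch_size):
            batch = tokenizer(list(texts[start:start + batch_size]), padding=True,
                              truncation=True, max_length=256, return_tensors="pt")
            predictions.extend(model(**batch).logits.argmax(dim=-1).tolist())
    return predictions
```

Validation then amounts to `accuracy(predict(tokenizer, model, validation_texts), validation_labels)` on data the model has not seen during training.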

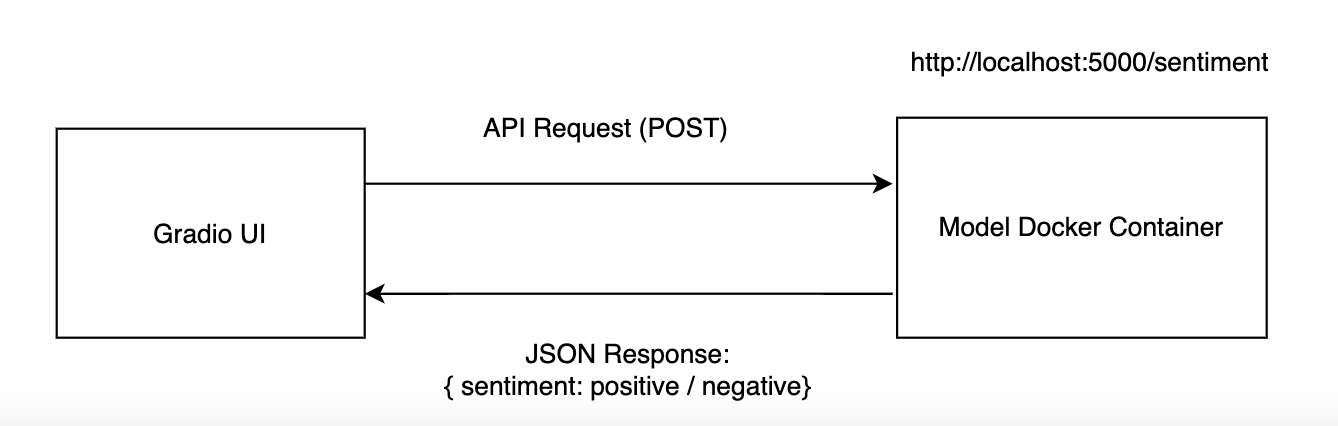

Deployment and Application

With the fine-tuned model ready, the next step was deployment.

Containerization with Docker

We containerized the model using Docker to ensure consistent performance across different environments. This approach simplifies deployment and scaling, making it easier to integrate the model into various applications.

Integrating into Your Workflow

The model can be accessed via an API, allowing it to be incorporated into existing systems seamlessly. Whether it's a customer feedback platform, a social media monitoring tool, or an internal analytics dashboard, the model provides real-time sentiment analysis without external dependencies.
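To make the API idea concrete, here is a minimal sketch of an HTTP endpoint built with Python's standard library. The `/sentiment` path, the JSON request and response shapes, and all function names are our assumptions; the post does not describe the actual interface. The `classify` argument is any callable that maps a text to a `(label, score)` pair, for example a thin wrapper around the fine-tuned model.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Callable, Tuple

Classifier = Callable[[str], Tuple[str, float]]


def build_response(raw_body: bytes, classify: Classifier) -> Tuple[int, dict]:
    """Validate a JSON request body and return (HTTP status, response payload)."""
    try:
        text = json.loads(raw_body)["text"]
    except (ValueError, KeyError, TypeError):
        return 400, {"error": "expected a JSON object with a 'text' field"}
    if not isinstance(text, str) or not text.strip():
        return 400, {"error": "'text' must be a non-empty string"}
    label, score = classify(text)
    return 200, {"label": label, "score": score}


def make_handler(classify: Classifier):
    """Create a request handler class bound to the given classifier."""

    class SentimentHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            if self.path != "/sentiment":  # hypothetical endpoint path
                self.send_error(404)
                return
            length = int(self.headers.get("Content-Length", 0))
            status, payload = build_response(self.rfile.read(length), classify)
            data = json.dumps(payload).encode("utf-8")
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(data)))
            self.end_headers()
            self.wfile.write(data)

    return SentimentHandler


def serve(classify: Classifier, host: str = "127.0.0.1", port: int = 8000) -> None:
    """Serve sentiment predictions until the process is interrupted."""
    HTTPServer((host, port), make_handler(classify)).serve_forever()
```

Keeping the request handling in `build_response` separate from the server makes it easy to test without opening a socket, and the same function could be reused behind a different web framework.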

Potential Business Applications

The versatility of a fine-tuned sentiment analysis model extends to various use cases:

- Customer Support: Analyze support tickets or chat logs to prioritize negative feedback and respond promptly.

- Market Research: Monitor social media and reviews to gauge public opinion on products or campaigns.
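As an illustration of the customer support case, the sketch below orders tickets so that the most negative ones surface first. The `Ticket` type, the `negative_score` callable (assumed to return the model's probability that a text is negative), and the 0.5 threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Ticket:
    ticket_id: str
    text: str


def prioritize(tickets: Iterable[Ticket],
               negative_score: Callable[[str], float],
               threshold: float = 0.5) -> List[Ticket]:
    """Return tickets scoring at or above the threshold, most negative first."""
    scored = [(negative_score(ticket.text), ticket) for ticket in tickets]
    flagged = [pair for pair in scored if pair[0] >= threshold]
    flagged.sort(key=lambda pair: pair[0], reverse=True)
    return [ticket for _, ticket in flagged]
```

In practice `negative_score` would wrap the fine-tuned model, and the threshold would be tuned against how many tickets the support team can review.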

Conclusion

Fine-tuning models like DistilBERT enables businesses to run advanced natural language processing locally, without training a model from scratch or depending on external services. This cost-effective and secure approach improves data privacy and adaptability, helping organizations stay competitive in the digital age.

Explore the Code

If you're interested in the technical details, feel free to check out the open-source code on the GitHub repository.